NeuSE: Neural SE(3)-Equivariant Embedding for Consistent Spatial Understanding with Objects

Abstract

We present NeuSE, a novel Neural SE(3)-Equivariant Embedding for objects, and illustrate how it supports object SLAM for consistent spatial understanding under long-term scene changes. NeuSE is a set of latent object embeddings created from partial object observations. It serves as a compact point cloud surrogate for complete object models, encoding full shape information while transforming SE(3)-equivariantly in tandem with the object in the physical world. With NeuSE, relative frame transforms can be directly derived from inferred latent codes. Using NeuSE for object shape and pose characterization, our proposed SLAM paradigm can operate independently or in conjunction with typical SLAM systems. It directly infers SE(3) camera pose constraints compatible with general SLAM pose graph optimization while maintaining a lightweight object-centric map that adapts to real-world changes. Our approach is evaluated on synthetic and real-world sequences featuring changed objects and shows improved localization accuracy and change-aware mapping capability when working either standalone or jointly with a common SLAM pipeline.
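To make the equivariance property concrete, here is a minimal sketch; it is not the authors' implementation. It assumes a latent code can be viewed as an N x 3 array of latent points that move rigidly with the object (the array layout, the function name `relative_transform`, and the toy data are illustrative assumptions). Under that assumption, the relative transform between two frames can be recovered in closed form with the Kabsch algorithm:

```python
import numpy as np


def relative_transform(z_a, z_b):
    """Estimate (R, t) such that z_b ~= z_a @ R.T + t (Kabsch algorithm).

    z_a, z_b: (N, 3) arrays of corresponding latent points (assumed format).
    """
    mu_a, mu_b = z_a.mean(axis=0), z_b.mean(axis=0)
    H = (z_a - mu_a).T @ (z_b - mu_b)  # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = mu_b - R @ mu_a
    return R, t


# Toy check: move a random "latent code" by a known rigid motion and recover it.
rng = np.random.default_rng(0)
z_frame_a = rng.normal(size=(16, 3))
theta = 0.7
R_true = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                   [np.sin(theta), np.cos(theta), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([0.5, -0.2, 1.0])
# Equivariance assumption: the code transforms exactly like the object does.
z_frame_b = z_frame_a @ R_true.T + t_true

R_est, t_est = relative_transform(z_frame_a, z_frame_b)
print("rotation recovered:", np.allclose(R_est, R_true))
print("translation recovered:", np.allclose(t_est, t_true))
```

Because the toy codes are noise-free, the recovered motion matches the applied one up to floating-point error; with codes inferred from real partial observations, the estimate would only be approximate.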

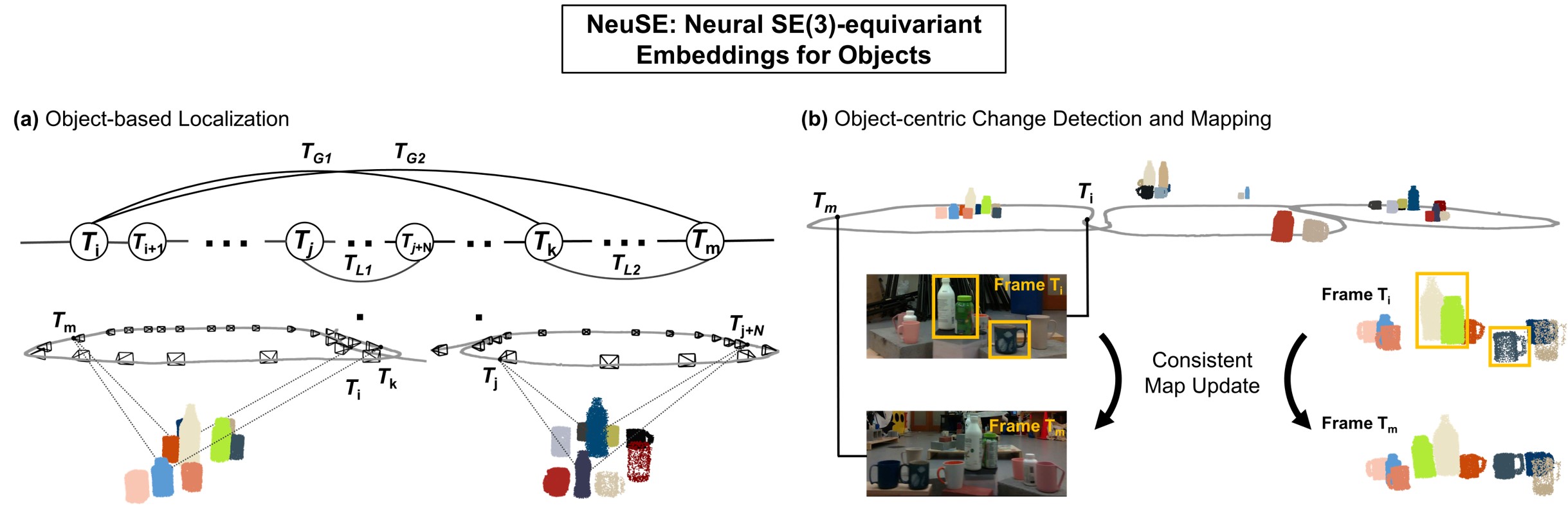

Approach Overview

The schematic above illustrates how we achieve consistent spatial understanding with NeuSE. The object-centric map of mugs and bottles constructed from the real-world experiment is shown here for illustration.

(a) NeuSE acts as a compact point cloud surrogate for objects, encoding full object shapes and transforming SE(3)-equivariantly with the objects. Latent codes of bottles and mugs from different frames can be effectively associated (dashed line) for direct computation of inter-frame transforms, which are then added to constrain camera pose (Ti) optimization both locally (TLi) and globally (TGi).

(b) The system performs change-aware object-level mapping, where changed objects are updated alongside unchanged ones with full shape reconstructions in the object-centric map.
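As a rough illustration of how an inter-frame transform from (a) can constrain camera poses, the sketch below assumes a static object, camera-to-world poses stored as 4x4 homogeneous matrices, and an inter-frame transform that maps object coordinates from camera frame i to camera frame j. The helper names (`make_pose`, `rot_z`, `propagate_camera_pose`) and the numbers are hypothetical, and the transform is built from ground truth here rather than from latent codes:

```python
import numpy as np


def make_pose(R, t):
    """Pack a rotation matrix and a translation into a 4x4 homogeneous transform."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


def rot_z(theta):
    """Rotation by theta radians about the z axis."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def propagate_camera_pose(T_world_cam_i, T_camj_cami):
    """Camera-to-world pose of frame j from frame i's pose and the inter-frame transform.

    T_camj_cami maps coordinates of a static object from camera frame i to
    camera frame j (p_j = T_camj_cami @ p_i). Since the same world point satisfies
    p_w = T_world_cam_i @ p_i = T_world_cam_j @ p_j, it follows that
    T_world_cam_j = T_world_cam_i @ inv(T_camj_cami).
    """
    return T_world_cam_i @ np.linalg.inv(T_camj_cami)


# Hypothetical ground-truth camera poses for two frames.
T_world_cam_i = make_pose(rot_z(0.3), np.array([1.0, 0.0, 0.5]))
T_world_cam_j = make_pose(rot_z(-0.4), np.array([1.5, 0.8, 0.5]))

# A static object point seen from both cameras (homogeneous coordinates).
p_world = np.array([2.0, 1.0, 0.0, 1.0])
p_i = np.linalg.inv(T_world_cam_i) @ p_world
p_j = np.linalg.inv(T_world_cam_j) @ p_world

# Stand-in for the transform that would be derived from associated latent codes.
T_camj_cami = np.linalg.inv(T_world_cam_j) @ T_world_cam_i

T_world_cam_j_est = propagate_camera_pose(T_world_cam_i, T_camj_cami)
print("pose recovered:", np.allclose(T_world_cam_j_est, T_world_cam_j))
```

In a pose graph, such a relative transform would typically enter as an edge between the two camera nodes rather than being chained directly; the direct composition here only shows the geometry.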

Result Highlights

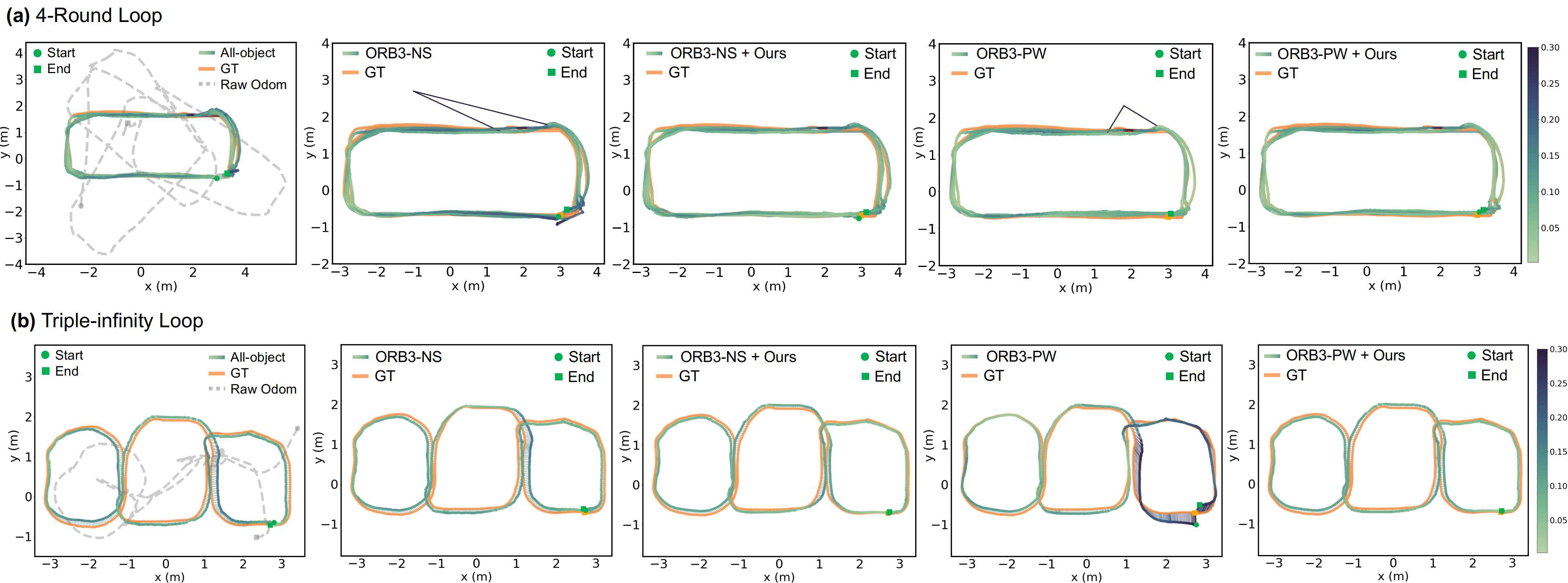

We evaluate the proposed algorithm on both synthetic and real-world sequences consisting of unseen instances of the bottle and mug categories, in which objects are added, removed, or swapped to simulate long-term environment changes. Below, we showcase the localization and mapping results of the proposed NeuSE-based object SLAM approach on two self-collected real-world sequences: the 4-Round loop and the Triple-infinity loop.

Localization with Temporal Scene Inconsistencies

The figure above displays various estimated camera trajectories compared to the ground truth (GT). The color bar on the right represents the Absolute Trajectory Error (ATE) value distribution along the trajectory.
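For readers unfamiliar with the metric, the sketch below shows one common way to compute ATE: rigidly align the estimated positions to the ground truth, then take the per-pose position error and its RMSE. The evaluation protocol used for the figure is not stated on this page, so the rigid (no scale) alignment, the assumption that poses are already time-associated, and the function name `ate_rmse` are all assumptions:

```python
import numpy as np


def ate_rmse(gt_xyz, est_xyz):
    """Return (RMSE, per-pose errors) after rigidly aligning est_xyz to gt_xyz.

    gt_xyz, est_xyz: (N, 3) arrays of time-associated camera positions.
    """
    mu_g, mu_e = gt_xyz.mean(axis=0), est_xyz.mean(axis=0)
    H = (est_xyz - mu_e).T @ (gt_xyz - mu_g)  # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    # Keep the alignment a proper rotation (det = +1), not a reflection.
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    aligned = (est_xyz - mu_e) @ R.T + mu_g
    errors = np.linalg.norm(aligned - gt_xyz, axis=1)  # one value per pose
    return float(np.sqrt(np.mean(errors ** 2))), errors


# Toy trajectories: a helix as ground truth, plus two hypothetical estimates.
s = np.linspace(0.0, 4.0 * np.pi, 50)
gt = np.stack([np.cos(s), np.sin(s), 0.1 * s], axis=1)

c, sn = np.cos(0.5), np.sin(0.5)
R_offset = np.array([[c, -sn, 0.0], [sn, c, 0.0], [0.0, 0.0, 1.0]])
est_rigid = gt @ R_offset.T + np.array([1.0, 2.0, 3.0])  # same shape, other frame
est_noisy = est_rigid + np.random.default_rng(0).normal(scale=0.05, size=gt.shape)

rmse_rigid, _ = ate_rmse(gt, est_rigid)
rmse_noisy, per_pose_errors = ate_rmse(gt, est_noisy)
print("ATE RMSE (rigid copy):", rmse_rigid)
print("ATE RMSE (noisy copy):", rmse_noisy)
```

The per-pose errors are the kind of quantity a color bar along a trajectory would encode, while the RMSE summarizes them in a single number.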

(1) In column 1, the proposed object SLAM paradigm sustains reasonable localization performance when working standalone, using only NeuSE-inferred inter-frame camera pose constraints (objects available) or noisy external odometry measurements (objects unavailable).

(2) In columns 2-5, the proposed object SLAM paradigm improves localization accuracy when working jointly with typical SLAM systems (ORB-SLAM3 in this case). Specifically, in (a), integrating our strategy helps prevent tracking failure, as seen in the spike-free trajectory estimates in columns 3 and 5 compared with those in columns 2 and 4. In (b), our strategy eliminates the start and end point drift, resulting in an improved trajectory estimate when revisiting the lower right part of the environment, as indicated by the lighter color of the ATE value distribution in column 5 compared with column 4.

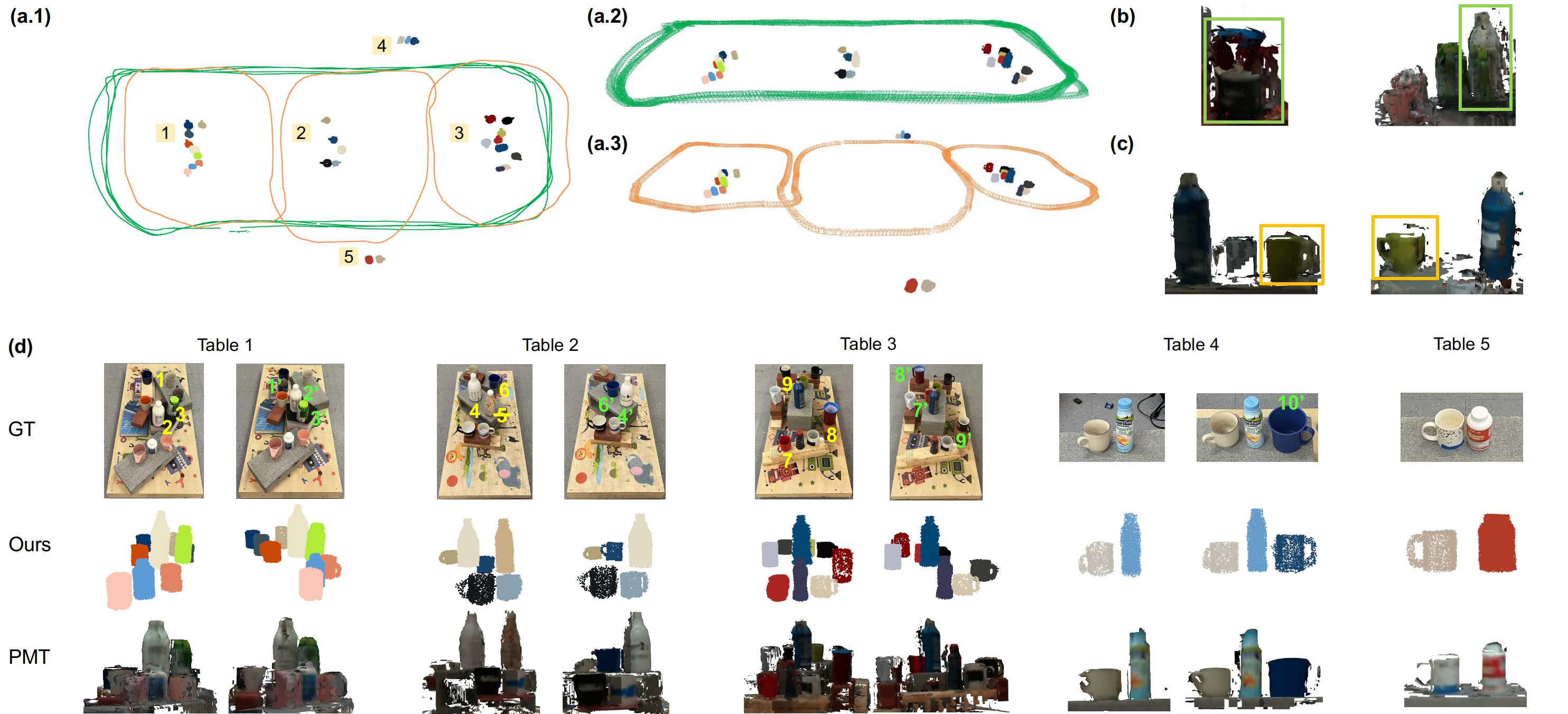

Change-aware Object-centric Mapping

The figure above shows the results of our change-aware object-centric mapping on real-world sequences. Our approach maintains a consistent map of the objects of interest in the environment and promptly updates it with the latest changes.

(a): (a.1) presents the constructed object-centric map alongside ground truth trajectories, demonstrating the qualitative spatial consistency between object reconstruction and actual camera motion. (a.2) and (a.3) show the full scene reconstruction against the estimated trajectories with the lowest root mean squared error (RMSE) of the absolute trajectory error (ATE).

(d): Shows the evolution of the object layout on each table before and after changes, comparing ground truth scenes (GT), object-centric maps from our approach (Ours), and reconstructions from the chosen change detection baseline, Panoptic Multi-TSDF Mapping (PMT). Changed objects are numbered as n, with n′ representing their correspondence after changes or newly added objects.

(b)-(c): Here we highlight the limitations of PMT in change detection and reconstruction. (b) displays reconstruction artifacts of PMT with overlapping changed objects, such as the red and black mugs (objects 8 and 9 of table 3) and the white and green bottles (objects 2 and 3 of table 1). (c) points out a false positive changed mug marked by PMT due to imperfect localization, where little overlap exists between the two sides of the green mug when viewed from different frames.

Citation

@inproceedings{fu2023neuse,
  title={NeuSE: Neural SE(3)-Equivariant Embedding for Consistent Spatial Understanding with Objects},
  author={Fu, Jiahui and Du, Yilun and Singh, Kurran and Tenenbaum, Joshua B and Leonard, John J},
  booktitle={Proceedings of Robotics: Science and Systems (RSS)},
  year={2023}
}